A few months ago, we were approached by INCG Media, a media brand from Taiwan that focuses on computer graphics and AI. They wanted to discuss our developments in archviz and AI. The edited interview was published in Chinese in their September issue, but you can read the full interview here in English.

We provide a glimpse into our journey as developers in the architecture industry. We discuss the founding of Pulze and its focus on custom software services, as well as the development of tools like Scene Manager and Render Manager. Additionally, we share our experiences with AI-generated content and our vision for the future of architectural visualization. Join us as we explore the exciting intersection of automation, AI, and design with Pulze.

1. Hello Peter and Mihály, can you briefly introduce yourselves?

Peter: I’m one of the co-founders and developers at Pulze. I have a passion for 3D and coding, and I very much enjoy the niche where these collide. After finishing high school, I started diving into architectural visualization, and did a few years of freelancing before joining Brick Visual in 2013. Initially, I was hired as a 3D artist to produce still images. However, over the years, my position evolved significantly. I began working on animations, and started to produce internal tools for the team. Eventually, I shifted my focus to development, which led us to found Pulze.

Mihály: I had a very similar life journey up to this point, with the difference that my point of entry was more centered around VFX, design, sound engineering and 2D animation.

2. Next, can you tell us about Pulze? For example, the circumstances in which Pulze was founded, and why it focused on developing custom software services for the architecture industry?

P: We have always recognized the need to automate or expand certain processes to create better images and animations. This led us to create solutions for ourselves, which we soon realized could benefit the entire industry.

When we joined Brick in 2013, it was a relatively small company with only 12 employees. As the company grew over the next few years, we faced many new challenges: more artists, bigger projects, and tighter deadlines.

We encountered problems for which off-the-shelf solutions were unavailable. Because we both had an interest in coding, we delved into the world of scripting for 3ds Max, Photoshop, and After Effects.

After a few months of learning, we produced some useful tools for ourselves and our team. The main goal of these scripts was to simplify daily work for the artists, making tasks faster and less prone to critical errors.

This was a great success, and we enjoyed it so much that we gradually stepped back from production to focus solely on development. In 2017, Brick established an R&D department, which gave us the opportunity to experiment with new things and play with the latest technology. It was during this time that we began building the first versions of the Pulze products.

M: Honestly, at the beginning we didn't have any intention of running a software business, but over the years we fell in love with it. Luckily, we had a really good position in Brick's R&D group, where we gained a lot of experience and insight into the industry. Working at a company with 50+ 3D artists gave us a huge advantage: we could quickly see the missing pieces and what we should focus on. All of this led to the formation of Pulze and its products.

3. By the way, did you also develop tools like Scene Manager, Render Manager, and Post Manager in response to the pain points commonly faced by architects and designers?

M: Indeed, after gaining deeper knowledge and confidence in coding, we wanted to tackle some of the bigger problems that 3D artists were facing in the archviz industry.

Our first larger internal development was the Scene Manager, which we released to the public as a commercial tool in 2018. The Scene Manager allows artists to create multiple states with different resolutions, lighting, output, and render settings in the same scene. This provides an easy-to-use playground where artists can try out different compositions and settings, and easily save and retrieve their ideas.

P: While the Scene Manager has a built-in batch render feature, it cannot utilize the entire capacity of an office. This is where our network rendering tool, Render Manager, comes into play. For a long time, we used Backburner to send jobs to the render farm, but while it was a solid choice for smaller animations, it lacked many features such as hardware monitoring and distributed rendering. It was also difficult to maintain and control in a medium-sized studio. This inspired us to fill the gap and create Render Manager.

Finally, after rendering your scene, you may want to fine-tune your image in Photoshop using some render elements. Updating Photoshop files with the latest render elements for the fifth iteration felt like something that should be automated. This is where the Post Manager comes into the picture.

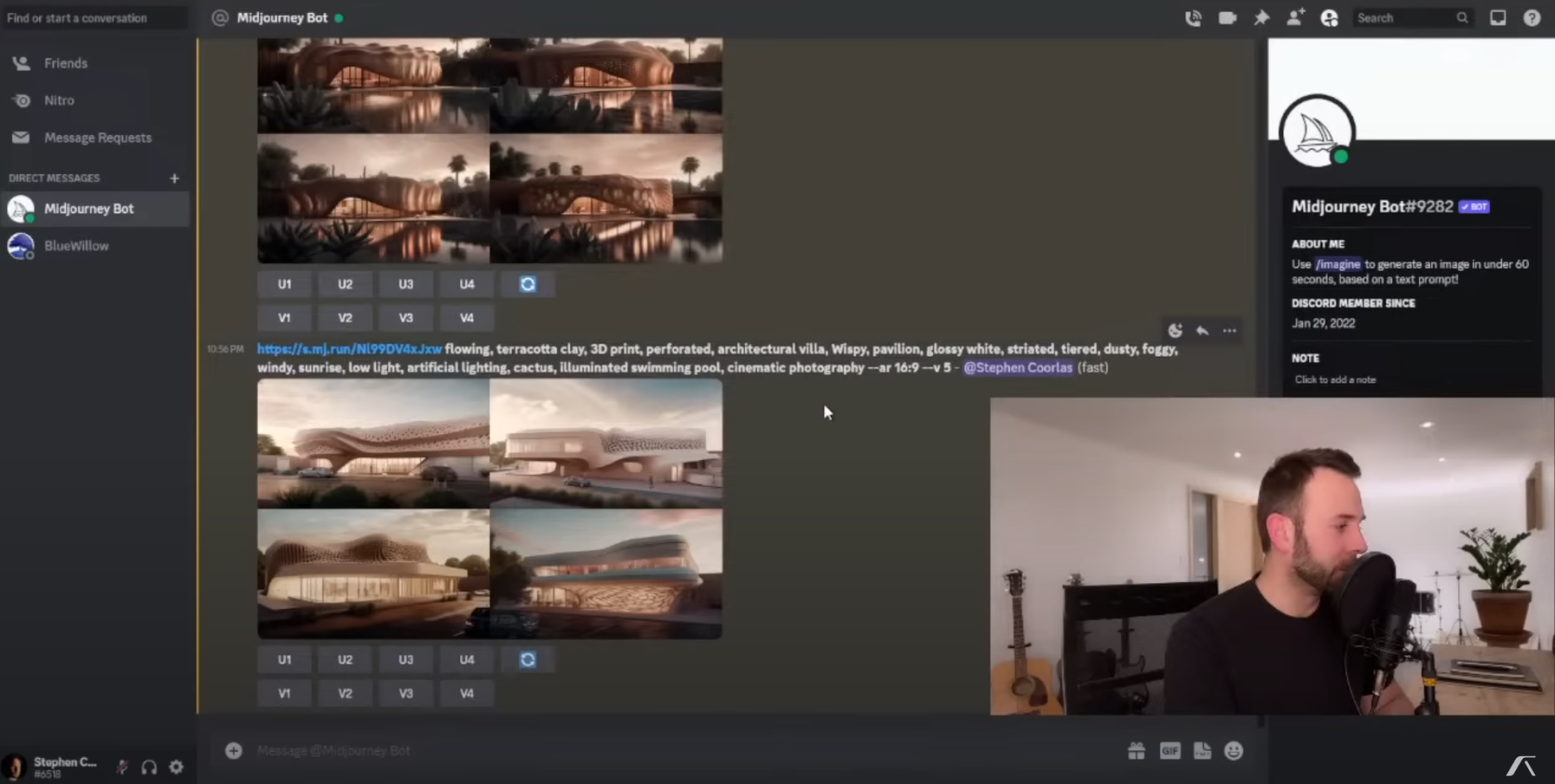

4. In Taiwan, the rise of AIGC is largely attributed to the efforts of Midjourney. Can you share with us how you initially became involved in the realm of AIGC?

M: When Midjourney was first released, it was like a hurricane. We realized that AI is no longer a matter of nerdy whitepapers and far-future possibilities; it's very real, and already on our doorstep. After the introduction of ChatGPT 3.5 and 4, and Midjourney v4 and then v5, we saw that this was no longer a fancy new toy but a serious force that should be utilized.

P: Honestly, for a long time I avoided Midjourney and other image generation solutions. However, over the past year, while talking to our customers and visiting industry conferences, it became clear that many people are trying to incorporate these solutions into their daily workflow. This was very exciting, and eventually I became interested in it as well. I often found myself playing with prompts and creating random images, which is a bit addictive.

Midjourney - Image from Stephen Coorlas's YouTube channel

5. Providing “highly automated processes” is a key objective for your services. From your perspective, what benefits can automated processes and AIGC bring to designers in their workflow?

P: Currently, Midjourney or Stable Diffusion can be used as inspiration tools, or you can incorporate parts of the generated image, such as the background, foreground, or characters, into your work. Additionally, the AI features in Photoshop can greatly boost your workflow in some cases.

However, the problem with most AI solutions, whether a language model or an image generator, is that they cannot produce consistent and predictable results. Often, you may find yourself rewriting prompts to get the result you are looking for instead of simply doing it manually.

While AI is powerful in some areas, it is not suitable for everything and people should be aware of its limitations.

Artifacts, hallucinations and unwanted results

M: Apart from the image generation tool (Project Dream), we are experimenting with a few non-product-related projects. For instance, our documentation and support tickets could be processed by a language model, leading to faster support responses and easing our workload.

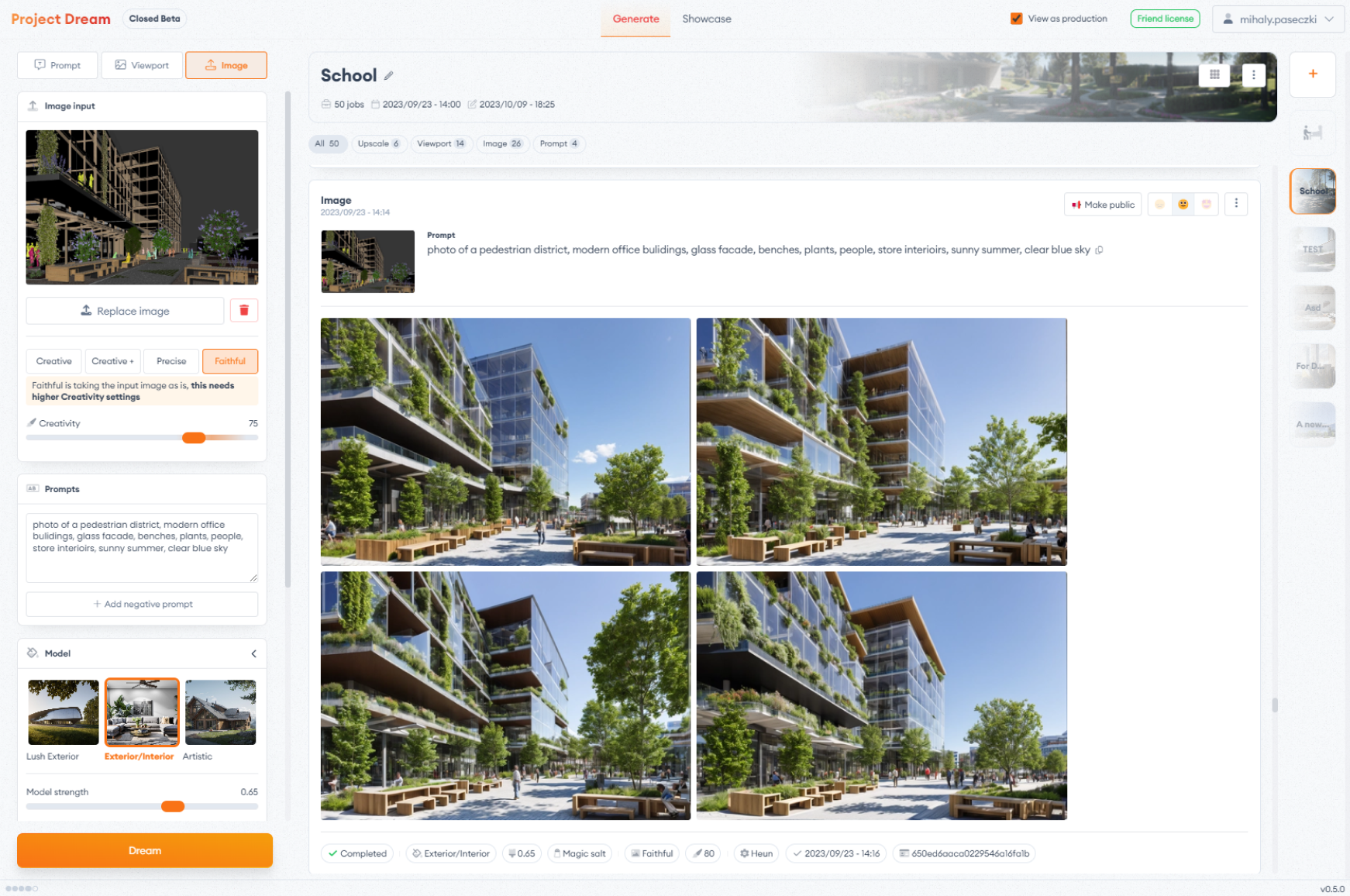

6. Can you briefly tell us about the AI tool Project Dream and the purpose behind developing it?

M: Sure! It’s an AI image generation tool that captures your 3D application’s viewport and generates high-quality architectural visualization images while preserving your composition and most of your geometry. It’s an excellent way to gain inspiration for moods and color palettes during the early stages of a visualization project. You could also use some parts of the generated images in post-production.

The user interface of Pulze - Project Dream

7. Aside from Project Dream, are there any AIGC workflows or tools that are already part of your daily work? Whether it is text generation or any other type of AIGC workflow that helps you develop tools?

M: We are experimenting with ChatGPT to generate ad copy and blog content ideas. However, we feel that the results are a bit too robotic and lack the human touch that we strive for in our content and communication. Nevertheless, we use GitHub Copilot and Copilot Chat daily for development, which provides a significant productivity boost.

8. In Taiwan, some interior designers and architects use AIGC to generate references and ideas for their designs. What are your expectations for how AIGC could further impact archviz in the future?

M: For photobashing, matte painting, and inspiration, sure. But for delivering final content, I don't think we are there yet. In our view, AI will create a sharp divide: there will be a huge number of mediocre, acceptable, OK images, while high-end, human-made plans and images will still be in high demand.

P: We are receiving AI-generated references from designers and architects more and more frequently. It is also common to receive Midjourney images that the 3D artist needs to reproduce, which, if you think about it, is kind of crazy.

9. Accordingly, do you know of other architects or companies that are using AI tools in their workflows?

M: We have some clients and friends who are using different image generation methods for inspiration, and I think everyone is at least playing around with it. Once the initial anxiety goes away, everyone gets curious.

P: Yeah, I think most people in our industry have tried it out at least once. For freelancers and smaller companies it is probably a no-brainer, and they use it for all sorts of projects. For larger firms it is trickier, as they are not allowed to use it because of copyright issues.

10. Do you have concerns about artists becoming overly reliant on AIGC tools and their design thinking becoming stagnant? What are your thoughts on this?

M: As I said earlier, as these tools become more accessible, the amount of medium-quality "generated content" will become overwhelming, and potentially boring. This will allow great artists to stand out and be in demand for creating new, original content.

P: This is definitely going to be a problem. We can already see a lot of ChatGPT-style blog posts and typical Midjourney images everywhere. However, as Mihály mentioned, there will always be a need for high-end original content.

Example of the images created with Project Dream

11. Furthermore, do you have any other concerns about AIGC, for example copyright or privacy issues?

M: As far as I'm aware, copyright law is still lagging behind on this issue. We believe that training a model on an artist's images could be considered "transformative use", as it does not simply reproduce the copyrighted work but uses it to create something new. That being said, we respect an artist's decision if they don't want their art to be used in AI training. We only work with training data that we have explicit permission to use.

P: We are trying to stay as up to date on this as possible. For example, one of our goals with Project Dream is to have a very clear and fair end-user license agreement that is acceptable and usable for everyone from freelancers to large enterprises.

12. As a developer, what are your expectations for AIGC in the near future?

M: We are super excited about the implementation of AI in the development area. ChatGPT and GitHub Copilot are both a great help and speed up our development considerably. They enable us to deliver features and products faster and more efficiently.

P: I’m really excited about what Stable Diffusion, Midjourney, OpenAI and other new players will bring us in the near future. These are really exciting times!